Achronix has decided to offer the FPGA technology it has developed for its own standalone parts as a set of embeddable cores, in the belief that changes in the market have made the concept of inserting FPGA macros into SoCs viable.

Steve Mensor, vice president of marketing for Achronix, said the company sees three main targets for embeddable FPGA cores: compute-server acceleration; software-defined networking equipment; and 5G wireless infrastructure. In the immediate future, the business is “going to be mostly shared between compute and wireless,” he added.

Although embedded FPGA IP has been used in commercial SoCs only rarely up to now, Mensor said the demand for lower latency between processing blocks in a system, together with concerns over power consumption and system cost, makes the idea of embedded FPGA cores more attractive in these systems. A further change in conditions for a design approach that has struggled in the more than 15 years since the embedded-FPGA concept first appeared is the density now available in advanced processes.

Image: The Speedcore IP is supplied as collections of LUTs, memories and DSP elements

“You need a minimum of 50,000 lookup tables,” Mensor argued. He pointed to the available capacity on 16nm finFET-plus at TSMC, one of the IP’s target processes along with Intel’s, where Achronix’s standalone FPGAs are made. “People ask if we will port back to a process like 65nm. But there is not enough room to do anything meaningful on a process like that.”

Mensor claimed the company already has customers in place for the embedded FPGA cores – expecting to close $12m in revenue for them by the end of this year. “We have customers using 150,000 lookup tables. They can invest because of the value proposition they are getting.”

According to Mensor, the key to opening up a market for embedded FPGA lies in the desire to reduce the number of internal I/O lines in basestations and server blades, many of which today couple a custom SoC with an FPGA to cater for late design or in-system alterations to the hardware.

“None of the deals are closing because of cost or power. They are closing because of lower latency in these accelerator applications,” Mensor said, adding that the company is not shifting to an IP model. “We are not getting out of [discrete] FPGAs. A new generation of the Speedster is targeted for the end of next year.”

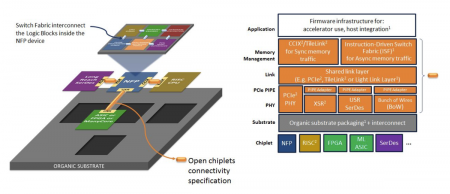

The FPGA IP is delivered as a hard macro compiled from a number of tiles. Some of those tiles can be DSP and memory-intensive or simply provide an array of programmable lookup tables. To try to simplify design, the tiles are linked through switch matrices that support a standard collection of interconnect bundles, from single wires up to octals. “All they have to do is be connected together by abutment,” Mensor said, adding that the macros would be supplied to fit the power grid used by the customer.

Steve Dodsworth, vice president of worldwide sales, said competition for embedded FPGA could come from 2.5D integration but noted equipment makers working on the 5G ramp-up seem to favour monolithic integration.

“We are doing a lot of research on 2.5D. It is definitely very important. I think the 2.5D market will emerge but the packaging technology is not quite there where it can become broad-based. The problem is if you talk about organic substrates, you are talking about having to use short-reach serdes,” said Dodsworth.

The need to deploy serdes interfaces on the dice sitting on an organic substrate means users cannot take advantage of the denser, lower-power interconnect that can be used between cores sitting on a monolithic IC.

“With silicon, you are facing yield issues,” Dodsworth added. “And you are limited in terms of substrate size to [the reticle limit of] 26mm x 34mm.

“With 2.5D, the cost point isn’t there. In wireless infrastructure, they ship a million-plus ASICs,” Dodsworth claimed, which puts the onus on monolithic integration. “But as mask costs are very high on the processes they need to use, they have to squeeze as much flexibility out of every mask start they do. Putting FPGA on the die gives them the flexibility they need because the [5G] standards aren’t there yet.”